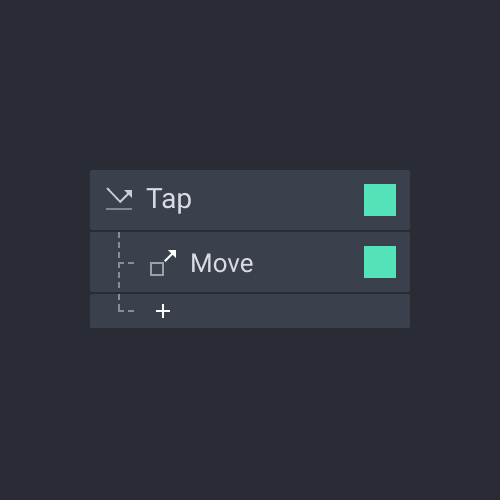

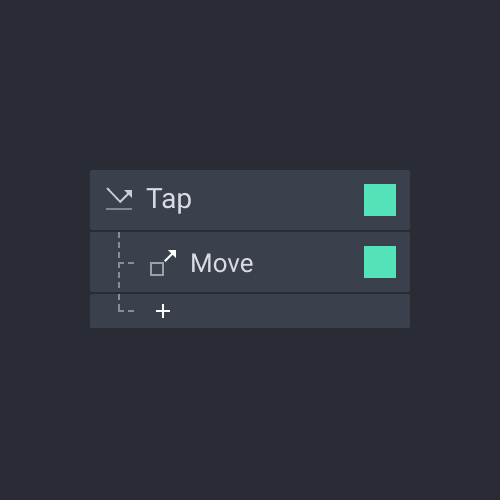

In ProtoPie, a Trigger is an event that sets off specific actions, called Responses, in your prototype.

A touch trigger involves physically touching the display of a smart device, for example with a Tap, Long Press, or Drag. Multi-touch gestures, such as Pinch and Rotate, are also supported.

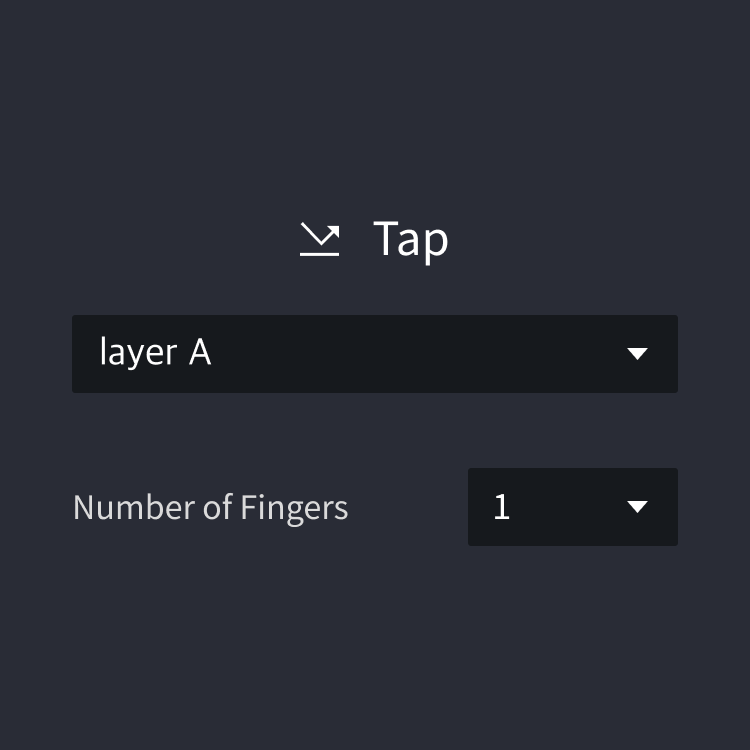

An action where the tip of a finger touches the touchscreen and is raised immediately.

![Tap trigger](https://cdn.sanity.io/images/vidqzkll/production/1ddd8ba841cef96a8665a6c218d869267d379596-1076x500.gif/trigger_tap.gif)

Up to five fingers supported

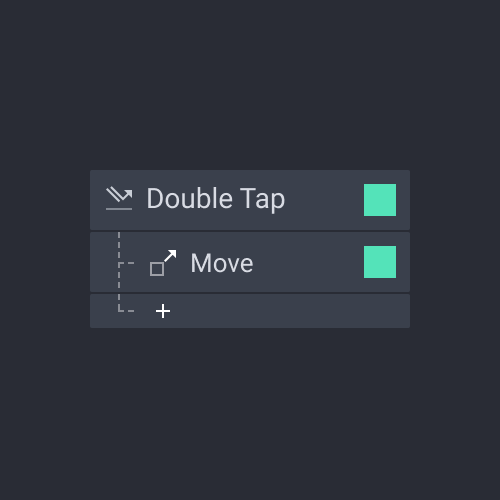

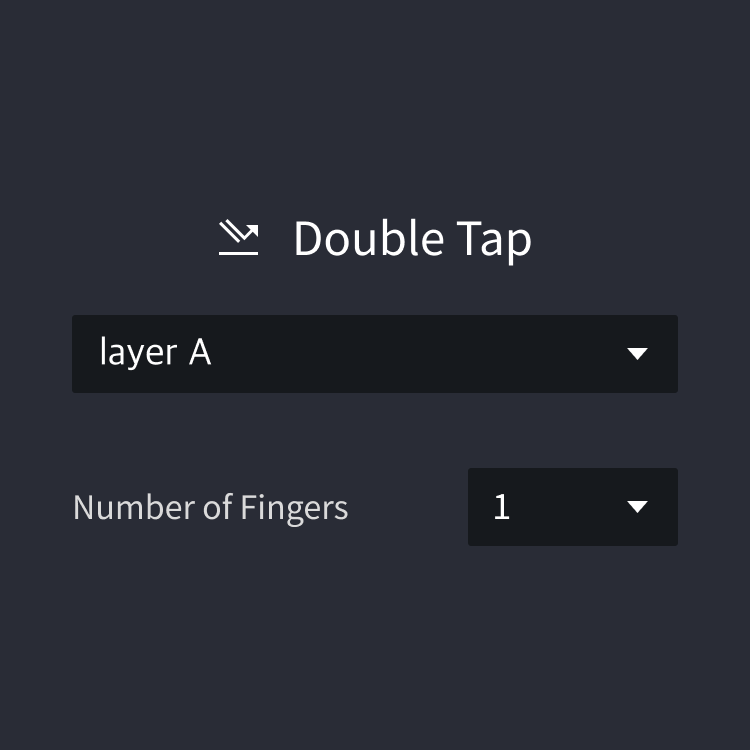

An action where the tip of a finger touches the touchscreen twice rapidly.

![Double Tap trigger](https://cdn.sanity.io/images/vidqzkll/production/44f38ac6c38212331cbd35ee6140348c0da02240-1076x500.gif/trigger_doubletap.gif)

Up to five fingers supported

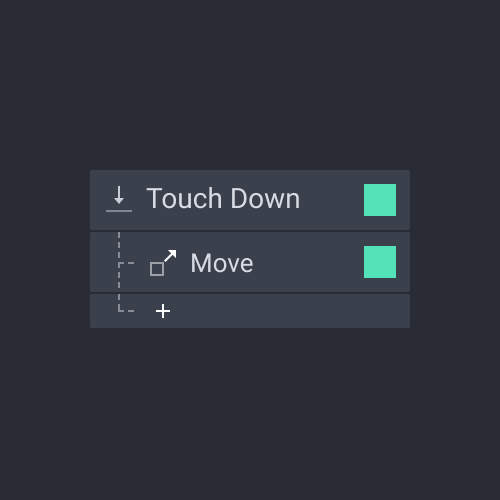

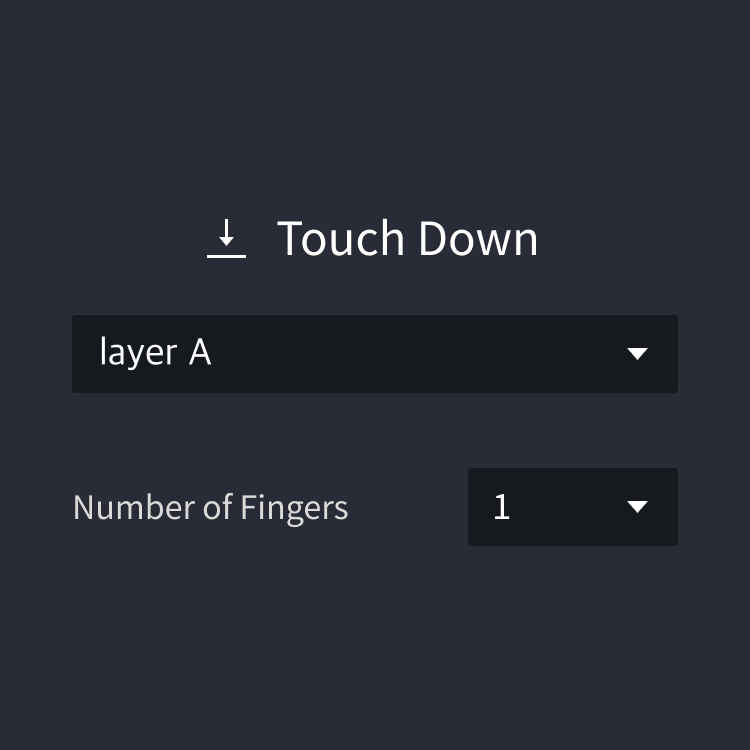

An action where the tip of a finger touches the touchscreen.

![Touch Down trigger](https://cdn.sanity.io/images/vidqzkll/production/7d724909786f35d792462818ba8375b823899f3c-1076x500.gif/trigger_touchdown.gif)

Up to five fingers supported

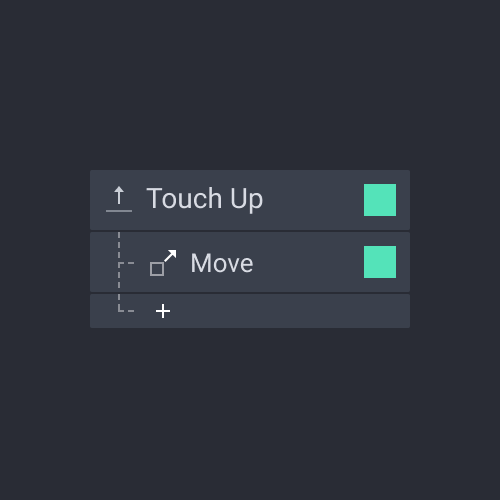

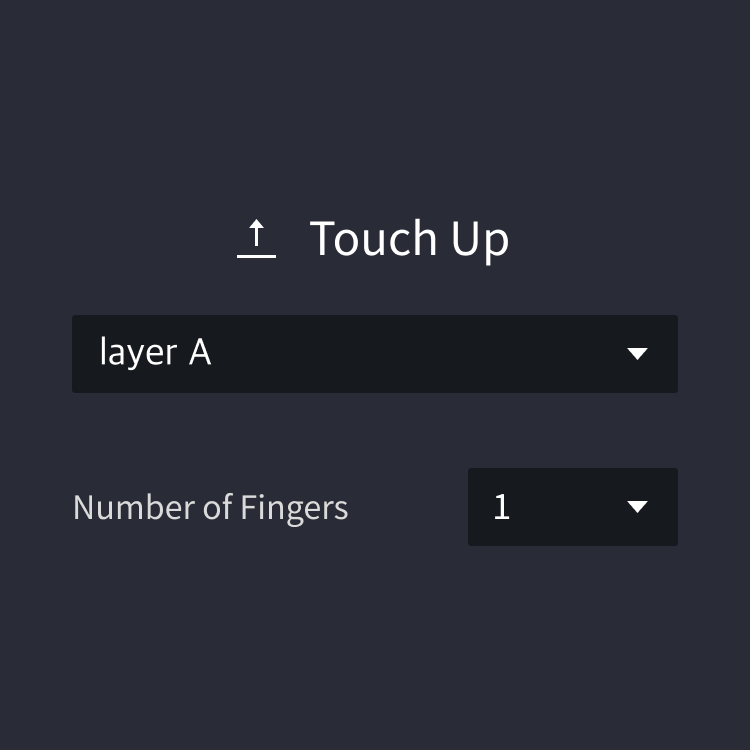

Touch Up triggers a response as soon as the user releases a layer. For example, combine it with Drag to start an interaction when the user drags a layer and then lets go.

![Touch Up trigger](https://cdn.sanity.io/images/vidqzkll/production/5a30b4cf657f72da8aab47e8ca0c6d1d5161788d-1076x500.gif/trigger_touchup.gif)

Up to five fingers supported

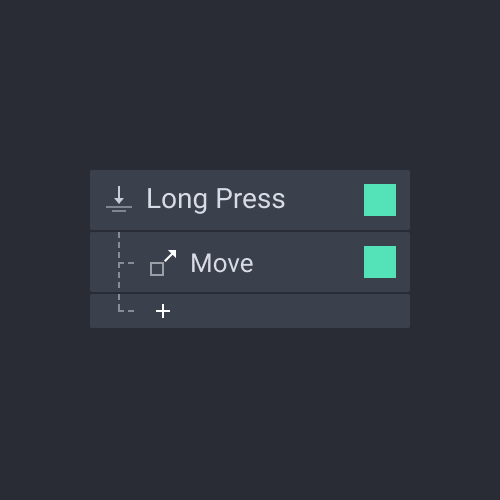

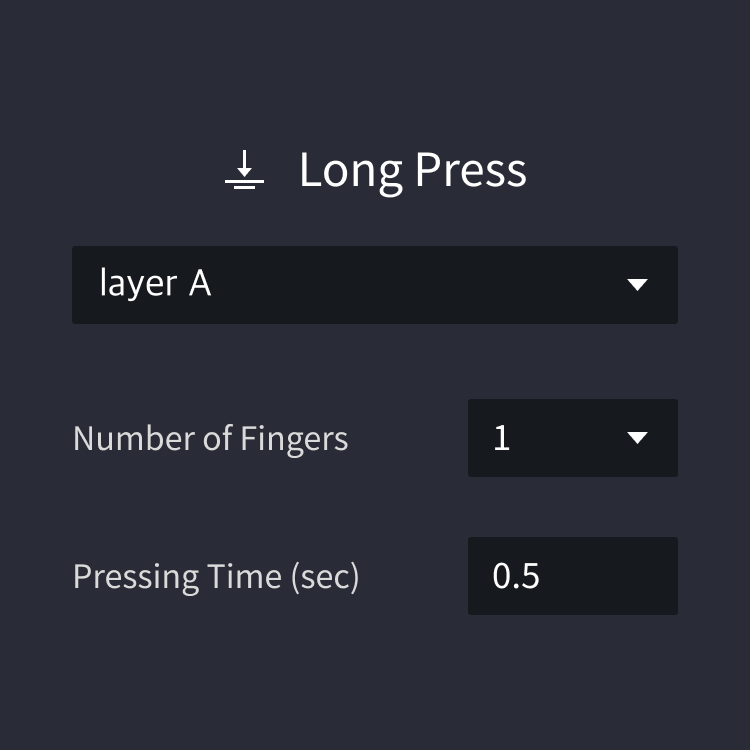

An action where the tip of a finger stays on the touchscreen for a certain amount of time before being lifted.

![Long Press trigger](https://cdn.sanity.io/images/vidqzkll/production/d0e8b787cc56f69ad6313020f25ba306160f5884-1076x500.gif/trigger_longpress.gif)

Up to five fingers supported

The amount of time during which a finger touches a screen
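As a rough mental model, the duration property decides whether a touch counts as a long press. The sketch below is a simplified illustration, not ProtoPie's implementation. The function name `is_long_press` and the 0.5-second default are hypothetical.

```python
# Simplified, hypothetical model of the Long Press duration check.
# Not ProtoPie's implementation; the 0.5 s default is an assumption.

def is_long_press(touch_down_time: float, touch_up_time: float,
                  duration: float = 0.5) -> bool:
    """Return True if the finger stayed on the screen for at least `duration` seconds."""
    held_for = touch_up_time - touch_down_time
    if held_for < 0:
        raise ValueError("touch_up_time must not be earlier than touch_down_time")
    return held_for >= duration


print(is_long_press(0.0, 0.2))  # False: lifted too soon
print(is_long_press(0.0, 0.8))  # True: held past the duration
```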

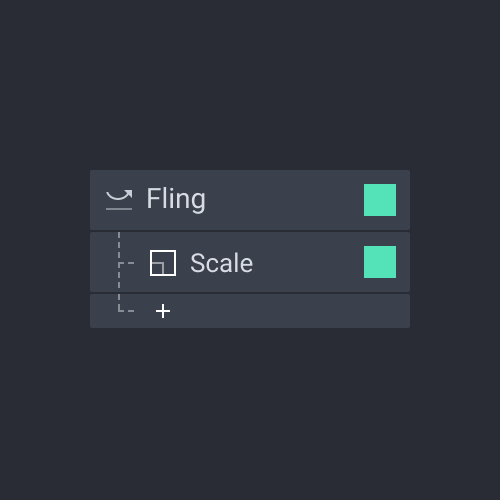

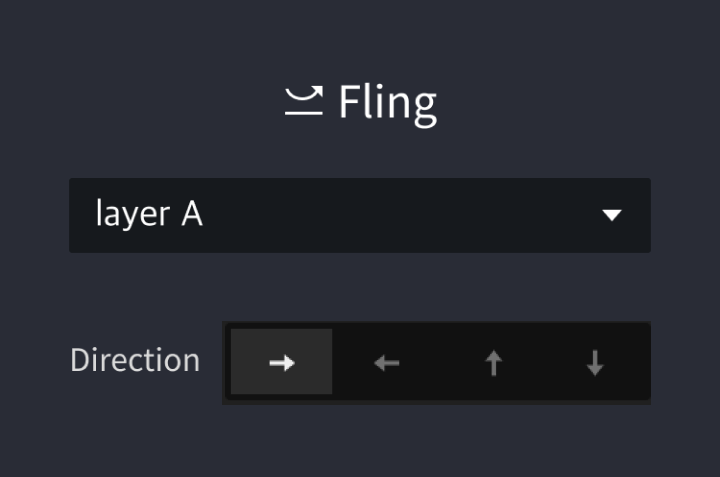

A response is triggered when a layer is swiped in the chosen direction at a speed faster than the default speed.

![Fling trigger](https://cdn.sanity.io/images/vidqzkll/production/ef7c6b3afa59a93f736b814c9bffe55d869f9bbf-1076x500.gif/trigger_fling.gif)

The direction in which the finger moves
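The sketch below models Fling as a speed check followed by a direction check. It illustrates the idea under stated assumptions and is not ProtoPie's code. The 1000 px/s threshold, the dominant-axis rule for picking a direction, and the `fling_direction` name are all hypothetical.

```python
import math
from typing import Optional

# Hypothetical speed threshold standing in for the Fling "default speed".
MIN_FLING_SPEED = 1000.0  # pixels per second (assumed value)


def fling_direction(dx: float, dy: float, dt: float) -> Optional[str]:
    """Classify a swipe as a fling in one of four directions, or return None.

    dx, dy: finger displacement in pixels (screen y grows downward).
    dt: duration of the swipe in seconds.
    """
    if dt <= 0:
        return None
    speed = math.hypot(dx, dy) / dt
    if speed <= MIN_FLING_SPEED:
        return None  # too slow to count as a fling
    # Use the dominant axis of movement to pick the direction.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


print(fling_direction(300, 20, 0.1))  # right (about 3000 px/s)
print(fling_direction(300, 20, 1.0))  # None (about 300 px/s)
```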

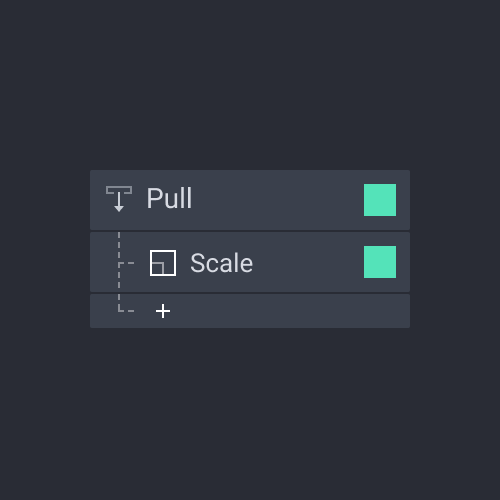

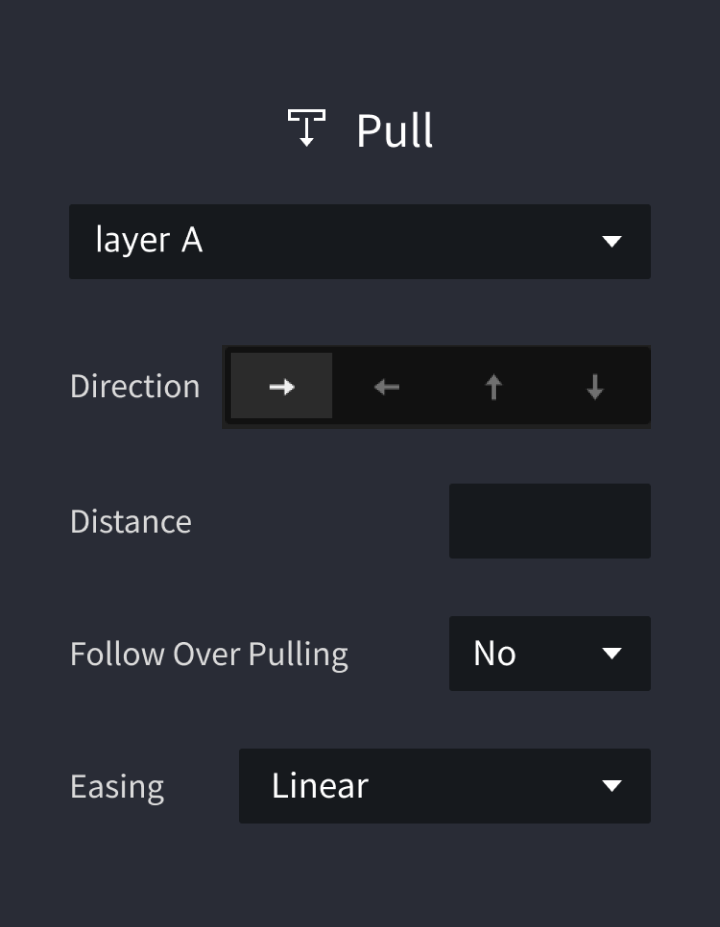

Pull is a trigger with true and false states. If the target layer is pulled past a certain point (true), the layer moves by the distance set in the trigger's property panel. If this condition isn’t met (false), the layer returns to its original position.

![Pull trigger](https://cdn.sanity.io/images/vidqzkll/production/aeb5e3e4b6ee120866297e2cae3cf213c6e0dbbf-1076x500.gif/trigger_pull.gif)

The direction in which the finger moves

The distance a layer moves

A reaction that takes place when a finger moves beyond a preset region

Change of acceleration of layer movement
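Here is a minimal sketch of Pull's two states. It assumes the outcome is decided at the moment the finger is released. The `threshold` parameter is a hypothetical stand-in for "a certain point", and `PullResult` and `resolve_pull` are illustrative names, not ProtoPie APIs. Easing and overscroll behavior are not modeled.

```python
from dataclasses import dataclass


@dataclass
class PullResult:
    triggered: bool       # True: pulled past the threshold; False: not far enough
    final_offset: float   # resting position relative to the layer's origin


def resolve_pull(pulled_by: float, threshold: float, distance: float) -> PullResult:
    """Decide where a pulled layer ends up once the finger is released.

    pulled_by: how far the finger pulled along the chosen direction (pixels).
    threshold: hypothetical stand-in for "a certain point".
    distance: the distance set in the trigger's property panel.
    """
    if pulled_by > threshold:
        # True state: the layer moves by the configured distance.
        return PullResult(triggered=True, final_offset=distance)
    # False state: the layer returns to its original position.
    return PullResult(triggered=False, final_offset=0.0)


print(resolve_pull(120, threshold=100, distance=200))
print(resolve_pull(50, threshold=100, distance=200))
```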

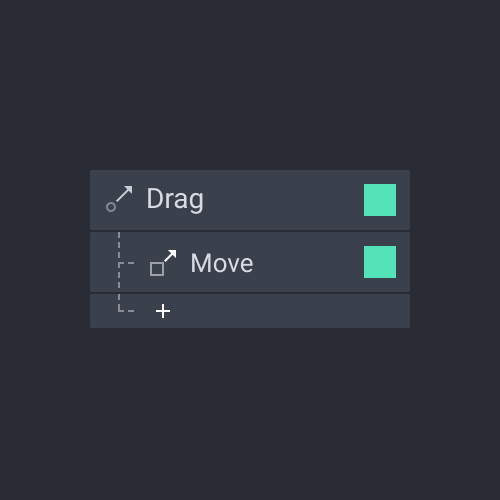

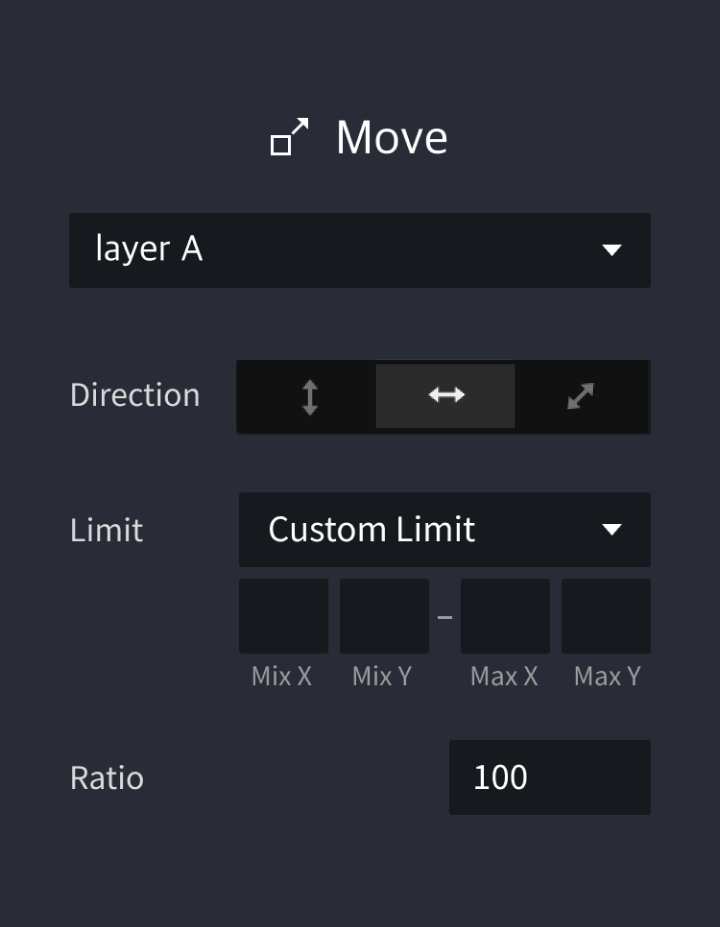

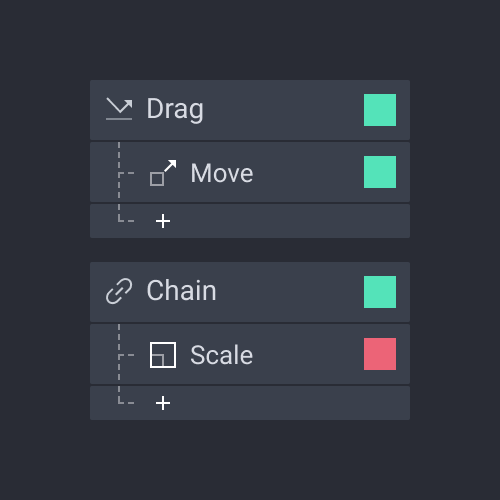

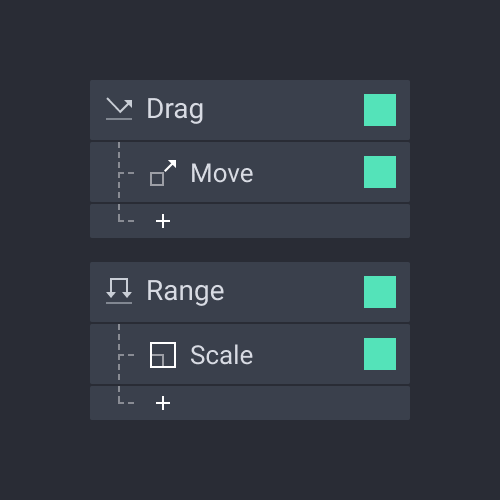

An action where the tip of a finger moves across the touchscreen while staying in contact with it.

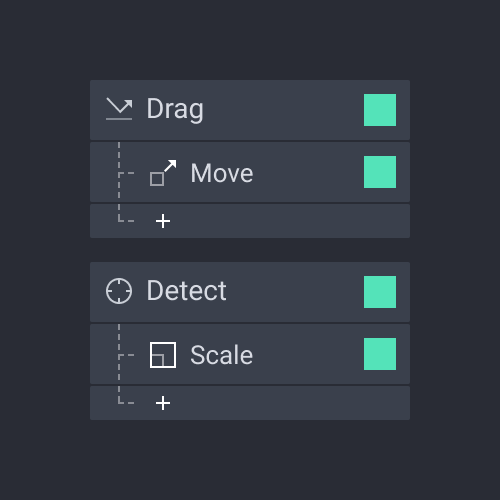

![Drag trigger](https://cdn.sanity.io/images/vidqzkll/production/c697dcc2d7b2be579bbb5728a322971a8f8df91d-1076x500.gif/trigger_drag.gif)

Up to five fingers supported

The direction in which a layer moves

The minimum and maximum values within which a layer can move

The ratio between the distance a layer is dragged and the distance a finger moves on the screen. When the value is set to 100, the two distances are equal; in other words, the layer covers the same distance as the finger. At higher values the layer moves farther than the finger, and at lower values it moves a shorter distance.
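The Ratio arithmetic can be written out directly. This one-axis sketch makes two assumptions: Ratio is a percentage (100 means equal distances), and the minimum and maximum values simply clamp the result. `dragged_position` is a hypothetical helper, not part of ProtoPie.

```python
from typing import Optional


def dragged_position(start: float, finger_delta: float, ratio: float = 100.0,
                     minimum: Optional[float] = None,
                     maximum: Optional[float] = None) -> float:
    """Position of a dragged layer along one axis.

    ratio is treated as a percentage: 100 moves the layer exactly as far as
    the finger, 50 moves it half as far, 200 twice as far.
    minimum/maximum optionally clamp where the layer can go.
    """
    position = start + finger_delta * ratio / 100.0
    if minimum is not None:
        position = max(position, minimum)
    if maximum is not None:
        position = min(position, maximum)
    return position


print(dragged_position(0, 100))                            # 100.0
print(dragged_position(0, 100, ratio=50))                  # 50.0
print(dragged_position(0, 100, ratio=200, maximum=150.0))  # 150.0
```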

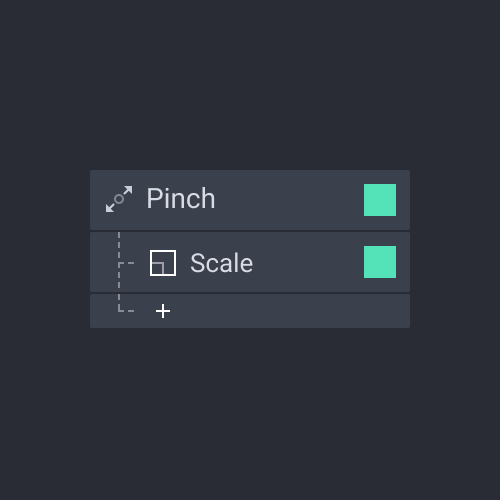

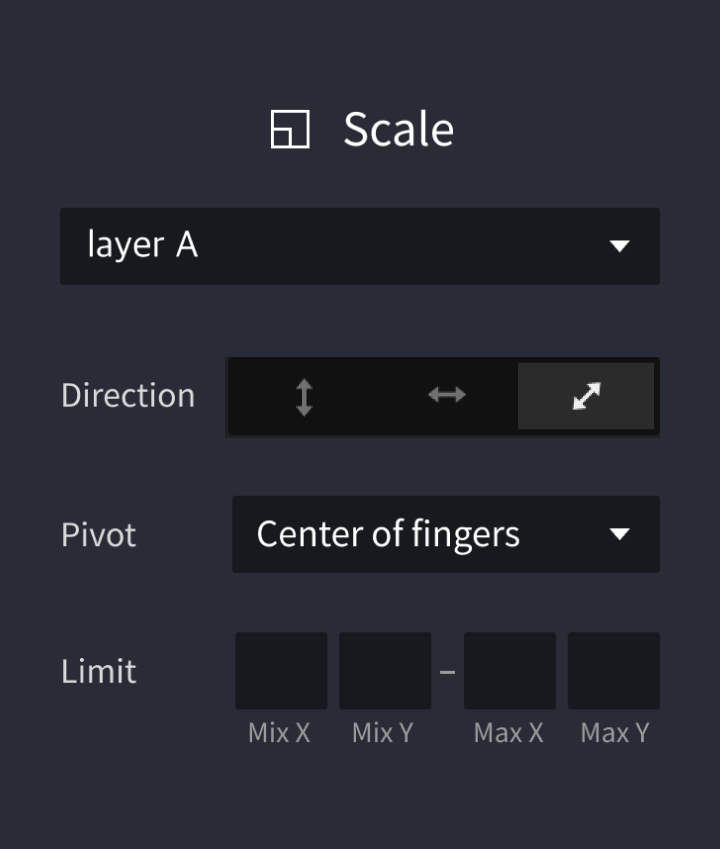

An action where two fingers pull away from or come toward each other while touching the touchscreen.

![Pinch trigger](https://cdn.sanity.io/images/vidqzkll/production/4317a6deb90e50a937f50c73f98fa733203db309-1076x500.gif/trigger_pinch.gif)

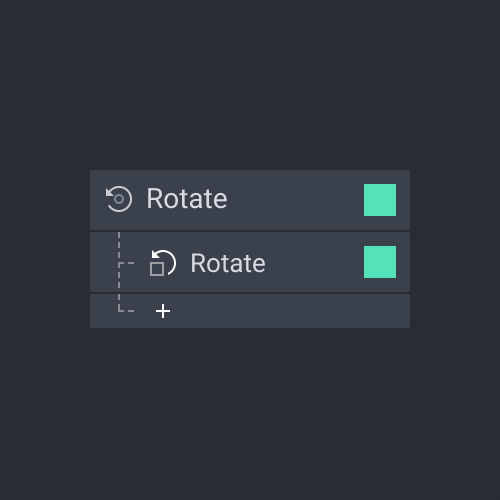

An action where two fingers turn in the same direction while touching the touchscreen.

![Rotate trigger](https://cdn.sanity.io/images/vidqzkll/production/e325d10c97c88ffbce701b4d4a025c7fd4585eca-1076x500.gif/trigger_rotate.gif)

The reference point around which a layer rotates or resizes

Conditional triggers activate Interactions when specific conditions are met.

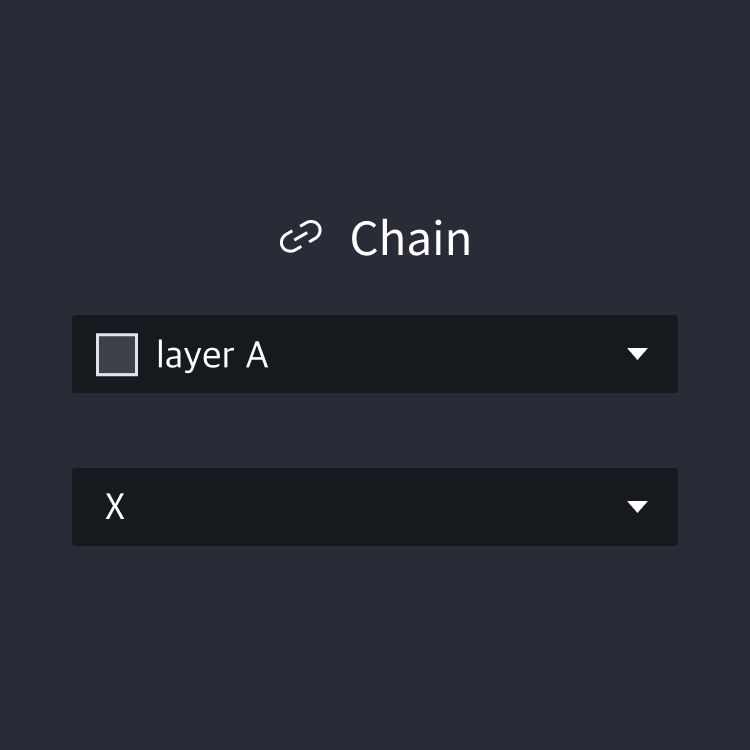

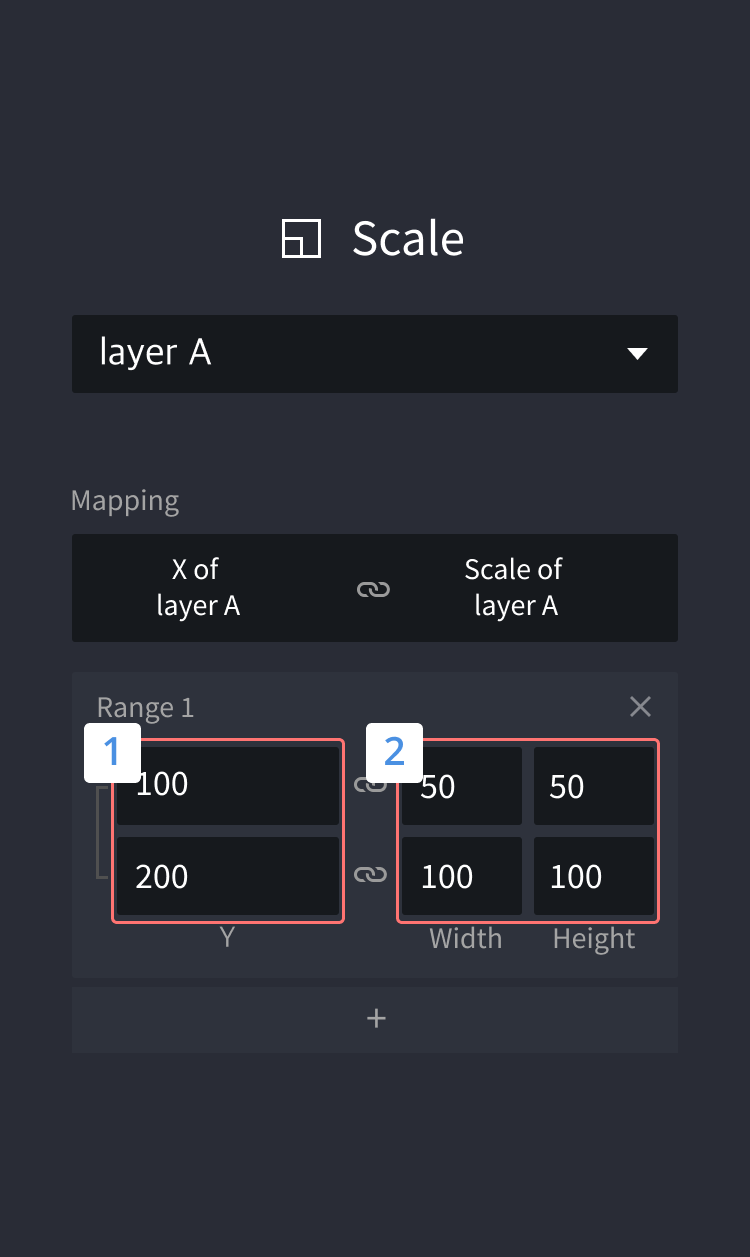

An action where a change in a property of one layer changes a property of another layer.

![Chain trigger](https://cdn.sanity.io/images/vidqzkll/production/5c5de07eb4776c44585a65232346d7b904518ec9-1076x500.gif/trigger_chain.gif)

The layer property whose values are used as a reference for changing other layers

Trigger’s Layer Mapping Range 1

The movement range of the chain’s target layer

Response’s Layer Mapping Range 2

The range of values that a response layer moves through while the chain’s target layer moves within Range 1
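If the mapping between the two ranges is linear, the relationship reduces to a simple interpolation, sketched below. `chain_map` and the opacity example are hypothetical illustrations, not ProtoPie's internals.

```python
from typing import Tuple


def chain_map(value: float, range1: Tuple[float, float],
              range2: Tuple[float, float]) -> float:
    """Linearly map a trigger-layer value from Range 1 onto Range 2.

    Values outside Range 1 are extrapolated; whether ProtoPie clamps them
    instead is not covered by this sketch.
    """
    (a_start, a_end), (b_start, b_end) = range1, range2
    if a_end == a_start:
        raise ValueError("Range 1 must span a non-zero interval")
    t = (value - a_start) / (a_end - a_start)  # 0.0 at a_start, 1.0 at a_end
    return b_start + t * (b_end - b_start)


# Example: as a card's x moves from 0 to 300, another layer's opacity goes from 100 to 0.
print(chain_map(0, (0, 300), (100, 0)))    # 100.0
print(chain_map(150, (0, 300), (100, 0)))  # 50.0
print(chain_map(300, (0, 300), (100, 0)))  # 0.0
```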

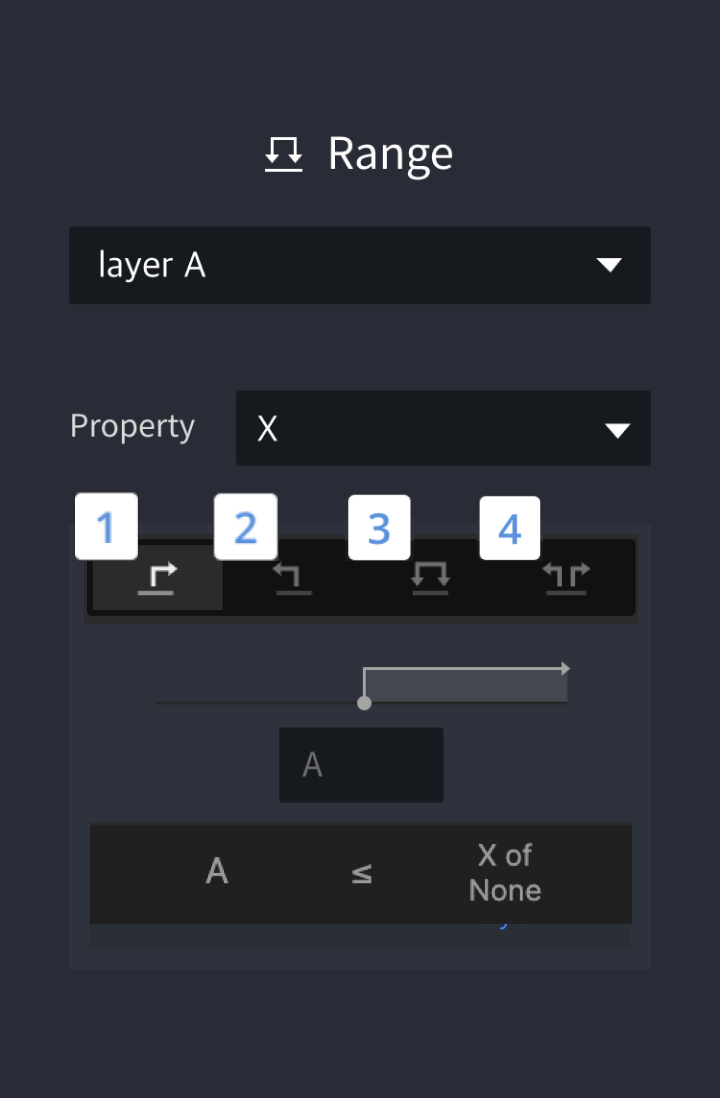

The Range trigger fires when an object’s property or variable transitions into a range that you define (hence the name). It fires only once, at the moment the value enters the range. For example, you might define a Range trigger that fires when the x property of an object becomes 200 pixels or greater. The trigger fires once as x goes from 199 to 200. It won’t fire again while x remains 200 or greater, and it won’t fire when x drops below 200 (e.g., from 200 to 199). However, it will fire again if x once more transitions from 199 to 200.

![Range trigger](https://cdn.sanity.io/images/vidqzkll/production/93d5a5f33fa41cf56b4a321096f27cc921b06cac-1076x500.gif/trigger_range.gif)

1. Greater than or equal to: when a target layer’s value reaches or exceeds a certain value
2. Less than or equal to: when a target layer’s value falls to or below a certain value
3. Between: when a target layer’s value lies between two certain values
4. Not between: when a target layer’s value lies outside the range between two certain values
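The once-per-entry behavior described above is essentially edge detection. The trigger remembers whether the value was already inside the range and fires only on an outside-to-inside transition. This sketch rests on two assumptions: bounds are inclusive, and a value that starts inside the range does not fire. `make_condition` and `RangeTrigger` are hypothetical names.

```python
from typing import Callable, Optional


def make_condition(kind: str, a: float,
                   b: Optional[float] = None) -> Callable[[float], bool]:
    """Build one of the four Range conditions as a predicate.

    Bounds are treated as inclusive; that is an assumption of this sketch.
    """
    if kind == "gte":
        return lambda v: v >= a
    if kind == "lte":
        return lambda v: v <= a
    if kind in ("between", "not_between"):
        if b is None:
            raise ValueError(f"{kind!r} needs two values")
        low, high = min(a, b), max(a, b)
        if kind == "between":
            return lambda v: low <= v <= high
        return lambda v: not (low <= v <= high)
    raise ValueError(f"unknown condition: {kind!r}")


class RangeTrigger:
    """Fires only when the watched value moves from outside the range to inside it."""

    def __init__(self, condition: Callable[[float], bool], initial_value: float):
        self.condition = condition
        # Assumption: a value that starts inside the range does not fire.
        self.inside = condition(initial_value)

    def update(self, value: float) -> bool:
        now_inside = self.condition(value)
        fired = now_inside and not self.inside
        self.inside = now_inside
        return fired


# The x >= 200 example from the text.
trigger = RangeTrigger(make_condition("gte", 200), initial_value=199)
print([trigger.update(x) for x in (200, 250, 199, 200)])  # [True, False, False, True]
```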

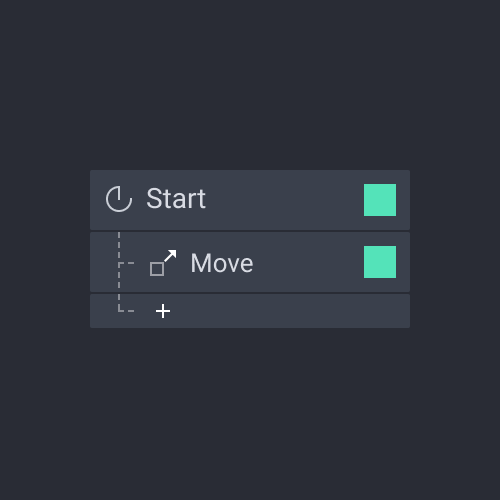

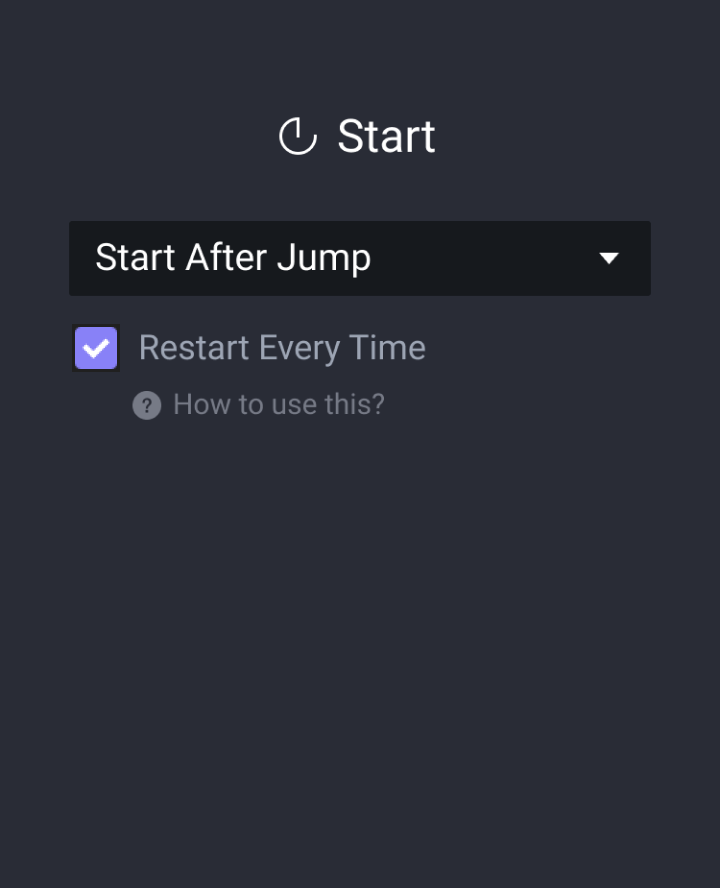

Start lets you activate interactions when a scene is loaded.

![Start trigger](https://cdn.sanity.io/images/vidqzkll/production/8b573a7d199cf9e3106a822fbd7cf2dc2b6257bf-1076x540.gif/trigger_onload.gif)

Start activates right after a Jump response is executed.

Start activates together with the Jump response.

Start activates every time the active scene is loaded.

A response is activated when a layer property or variable changes.

![Trigger activated by a property change](https://cdn.sanity.io/images/vidqzkll/production/1b0df136acb09272010146037edcc79ee4ad1f45-1076x500.gif/trigger_change.gif)

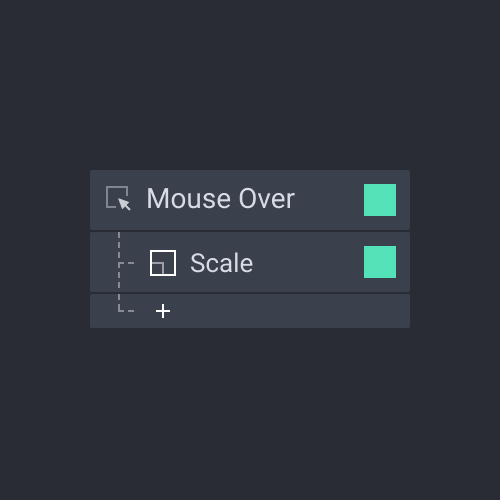

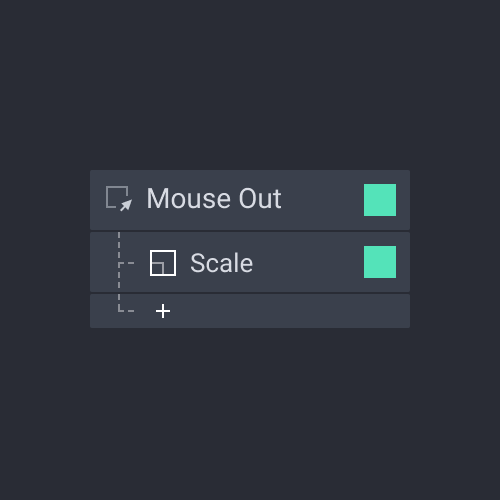

Mouse triggers activate based on the movement of a computer mouse, letting you create interactions when the cursor hovers over or leaves an object.

A response is triggered when the mouse pointer moves over an object.

![Mouse over trigger](https://cdn.sanity.io/images/vidqzkll/production/3e9afac60723705afed4de24a37e0c73874eb542-1076x500.gif/trigger_mouse_over.gif)

A response is triggered when the mouse pointer moves away from an object.

![Mouse out trigger](https://cdn.sanity.io/images/vidqzkll/production/9df25f980c17ce30f2039cd5af6ea83b6d9f6ad3-1076x500.gif/trigger_mouse_out.gif)

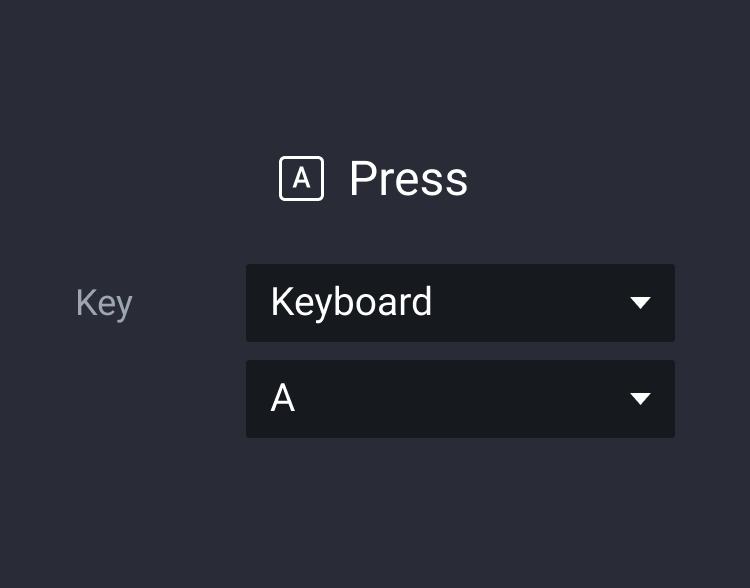

A response is activated when a key on a physical keyboard or an Android device is pressed.

Input triggers must be used with an input layer.

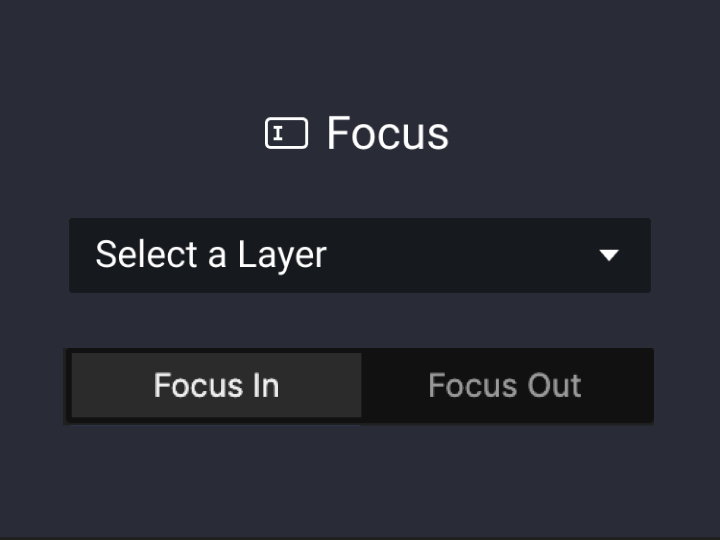

A response is activated when an input layer receives or loses focus. Focus In means that the blinking cursor is visible in the input layer or, if a smart device is used, that the native keyboard appears. Focus Out is the opposite.

A response is activated when the return key is pressed on a physical keyboard, or on the native keyboard if a smart device is used.

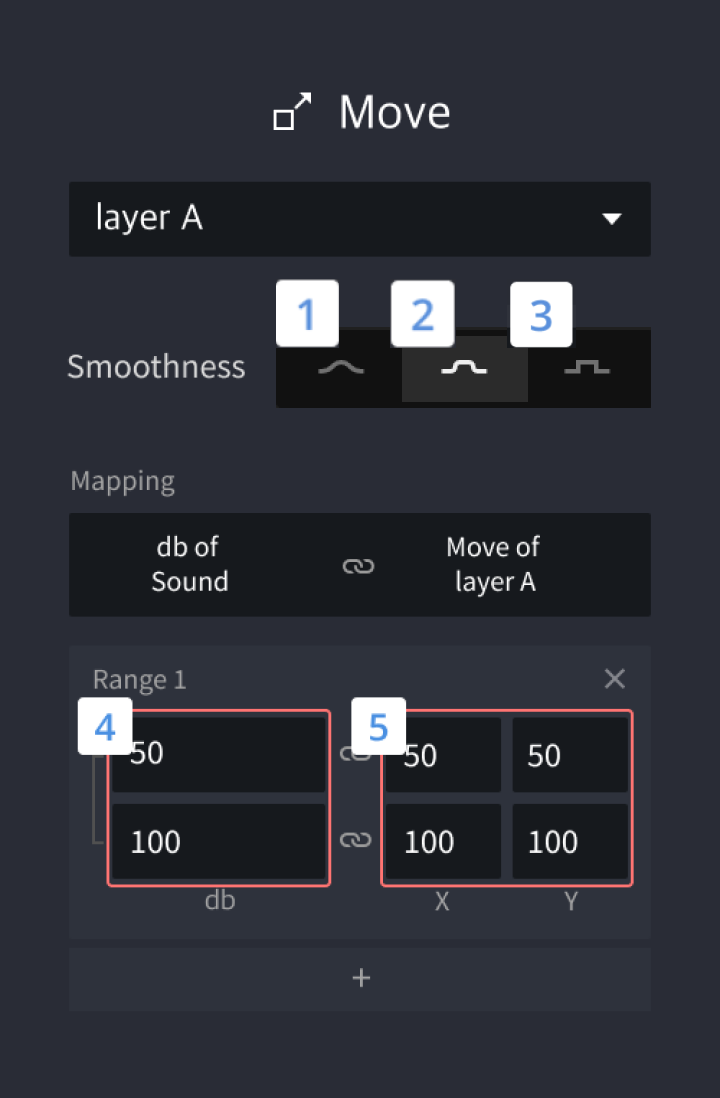

Sensor triggers give access to specific native sensors in smart devices and let you map responses to their values.

Smooths the layer movements mapped to specific sensor values. Three smoothness levels are available, from lowest (1) to highest (3).

The range of the sensor's values

The range of the layer's properties mapped to the sensor values
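The internal smoothing method is not described here. The sketch below therefore swaps in an exponential moving average, a common smoothing technique, and follows it with a linear mapping from the sensor range to the layer range. The alpha value for each level, the clamping, and the class name are all assumptions for illustration.

```python
from typing import Optional, Tuple

# Hypothetical smoothing factors: a lower alpha reacts more slowly, so level 3 is smoothest.
SMOOTHING_ALPHA = {1: 0.6, 2: 0.3, 3: 0.1}


class SmoothedSensorMapping:
    """Smooth raw sensor readings, then map them onto a layer property range."""

    def __init__(self, sensor_range: Tuple[float, float],
                 layer_range: Tuple[float, float], smoothness: int = 2):
        if smoothness not in SMOOTHING_ALPHA:
            raise ValueError("smoothness must be 1, 2, or 3")
        if sensor_range[0] == sensor_range[1]:
            raise ValueError("sensor range must span a non-zero interval")
        self.sensor_range = sensor_range
        self.layer_range = layer_range
        self.alpha = SMOOTHING_ALPHA[smoothness]
        self.smoothed: Optional[float] = None

    def update(self, reading: float) -> float:
        # Exponential moving average: move part of the way toward each new reading.
        if self.smoothed is None:
            self.smoothed = reading
        else:
            self.smoothed += self.alpha * (reading - self.smoothed)
        s_min, s_max = self.sensor_range
        l_min, l_max = self.layer_range
        t = (self.smoothed - s_min) / (s_max - s_min)
        t = min(max(t, 0.0), 1.0)  # clamp to the sensor range (assumption)
        return l_min + t * (l_max - l_min)


# Map a tilt angle of -90..90 degrees onto an x position of 0..300.
mapping = SmoothedSensorMapping((-90, 90), (0, 300), smoothness=3)
print(mapping.update(0))   # 150.0
print(mapping.update(90))  # about 165: the layer eases toward the new reading
```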

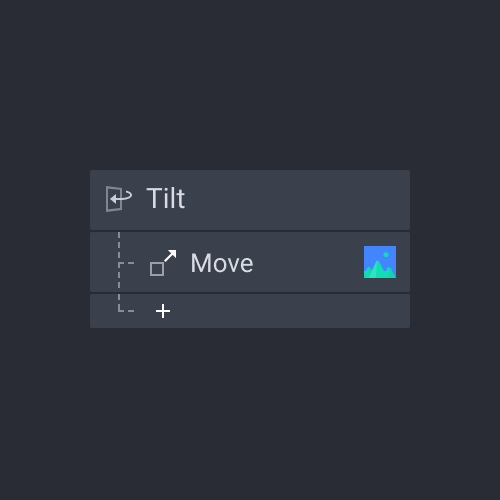

A response is activated when a smart device is tilted to specific angles.

![Tilt trigger](https://cdn.sanity.io/images/vidqzkll/production/276eb8efe706e62733abf07e3589f33052ea7b7e-1076x540.gif/trigger_tilt.gif)

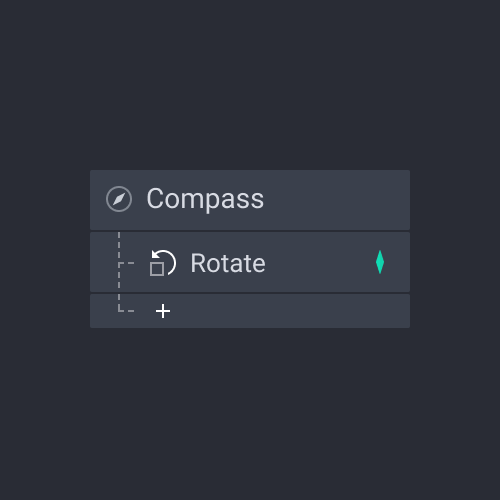

A response is activated based on the direction in which the smart device is pointing.

For example, a realistic compass prototype like this one combines Compass with the Rotate response. The rotation of the needle (Angle) is then determined by the detected compass angle (Degree), a value between 0 and 360, and the chosen rotation direction (clockwise or counterclockwise).

![Compass trigger](https://cdn.sanity.io/images/vidqzkll/production/aeb03c1ad2aac596f5300ac97d2b352bb80cff8b-1076x540.gif/trigger_compass.gif)

A response is activated based on the volume of a detected sound.

Learn how to use this trigger in the Mobile Game prototyping masterclass.

![Sound trigger](https://cdn.sanity.io/images/vidqzkll/production/ca2b0c4be14ab351a8e95451015d681b1fb446b0-1076x540.gif/trigger_sound.gif)

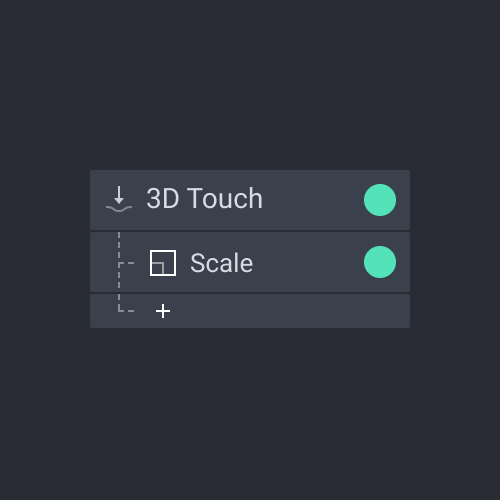

A response is activated based on the force with which the screen is pressed. Touch force values range from 0 to 6.7.

Note that 3D Touch is only supported by older Apple devices such as iPhone 6s, iPhone 6s Plus, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X, iPhone XS, and iPhone XS Max.

![3D Touch trigger](https://cdn.sanity.io/images/vidqzkll/production/fb3bce14b51d9310c072c3bb1760184ef0c01cf4-1076x540.gif/trigger_3dtouch.gif)

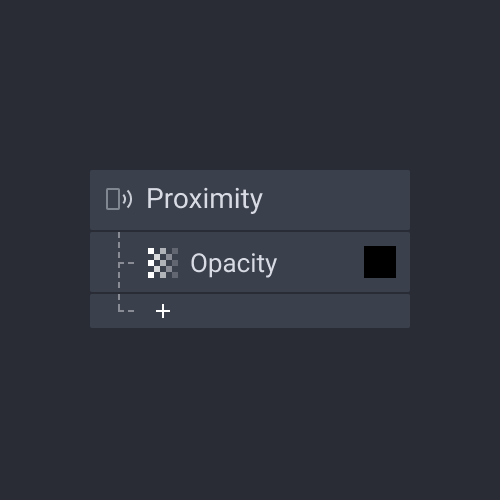

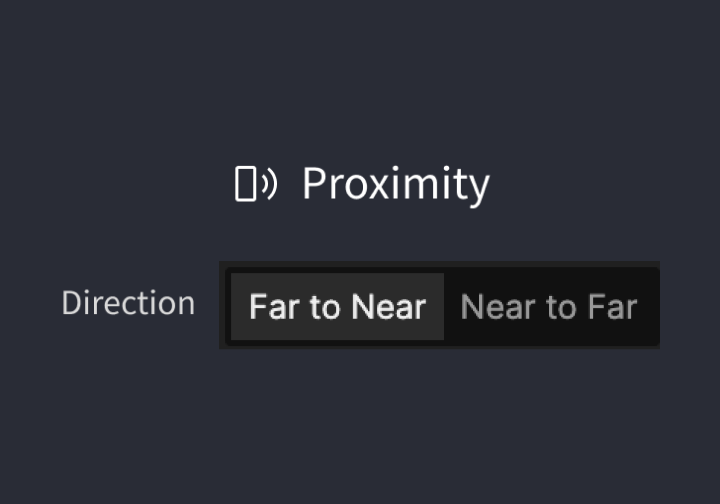

Proximity lets you create interactions based on the distance between an object and the smart device's proximity sensor.

![Proximity trigger](https://cdn.sanity.io/images/vidqzkll/production/baeac49d06402876de34eaa523673b01c514c34e-1076x500.gif/trigger_proximity.gif)

A response activates if the device moves closer to a physical object

A response activates if the device moves away from a physical object

Receive triggers make interactions among devices possible and must be used together with Send responses. A response is activated when a device with the Receive trigger accepts a message sent from a different device by a Send response. The message in the Receive trigger must match the one sent from the other device.

Send and Receive messages can be used within the same scene to modularize interactions or reuse a set of responses, avoiding repetitive work.

Inside a component, you can use the Send response to send a message that a Receive trigger outside the component receives. This also works the other way around. Refer to Components for more information.

Select ProtoPie Studio as a channel to allow interactions among devices (it works the same way for ProtoPie Connect).

To modularize interactions or reuse a set of responses while avoiding repetitive work, you can use Receive triggers and Send responses within one scene.

A message is a string that is transmitted. When the message in the Receive trigger on one device matches the message in the Send response, interactions among devices can take place.

You can also send a value together with a message. On the receiving side, this value must be assigned to a variable.
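The matching rule can be pictured as a small message bus. This toy model is not ProtoPie's API. In it, a Receive trigger fires only when the sent string matches its message exactly (exact, case-sensitive matching is an assumption). Any value sent along is stored in a variable before the response runs. `MessageChannel`, `on_receive`, `send`, and the message names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Optional, Tuple


class MessageChannel:
    """Toy model of Send/Receive: exact string matching routes a message to receivers."""

    def __init__(self) -> None:
        self._receivers: Dict[str, List[Tuple[Callable[[], None], Optional[str]]]] = defaultdict(list)
        self.variables: Dict[str, Any] = {}

    def on_receive(self, message: str, response: Callable[[], None],
                   assign_to: Optional[str] = None) -> None:
        """Register a Receive trigger; optionally store the sent value in a variable."""
        self._receivers[message].append((response, assign_to))

    def send(self, message: str, value: Any = None) -> int:
        """Deliver a message and return how many Receive triggers fired."""
        fired = 0
        for response, assign_to in self._receivers.get(message, []):
            if assign_to is not None:
                self.variables[assign_to] = value  # value is assigned on receipt
            response()
            fired += 1
        return fired


log = []
channel = MessageChannel()
channel.on_receive("openMenu", lambda: log.append("menu opened"), assign_to="menuId")
channel.send("openMenu", "settings")
print(log, channel.variables)  # ['menu opened'] {'menuId': 'settings'}
print(channel.send("closeMenu"))  # 0: no Receive trigger listens for this message
```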

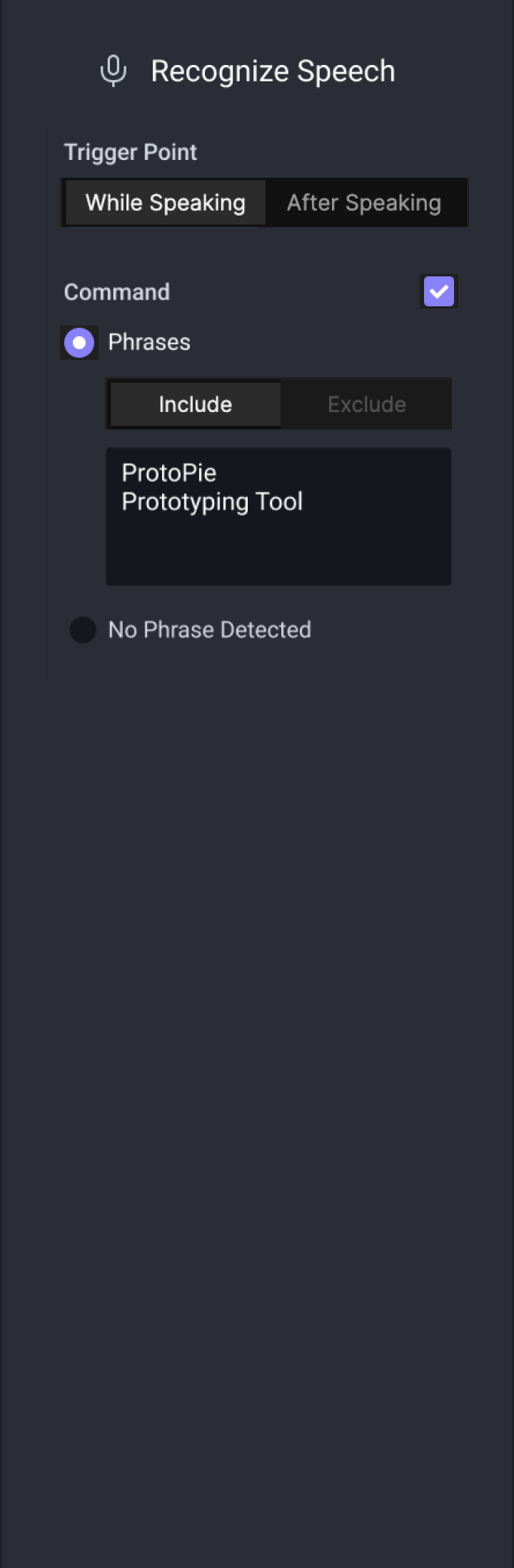

The Voice Command trigger enables triggering responses based on voice commands. You can set it to fire either while someone is speaking or after someone has finished speaking. It's possible to include or exclude specific phrases within the commands.

To use the Voice Command trigger, you need to enable listening with the Listen response.

Learn more about voice prototyping.

When speech is no longer detected, that is, when you stop speaking. This trigger point does not work when Continuous is enabled in the Listen response.

When speech is detected, that is, when you start speaking.

The action triggers only if the detected voice command includes one of the listed phrases. You can enter various words, phrases, or sentences and separate them using line breaks.

The action triggers only if the detected voice command does not include any of the listed phrases. You can enter various words, phrases, or sentences and separate them using line breaks.

The incoming speech does not contain any recognizable phrases. This can be caused by background noise or other sounds that cannot be interpreted as human language.
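The include and exclude options act as filters on the recognized speech. This minimal sketch assumes case-insensitive substring matching with one phrase per line. `voice_command_matches` is a hypothetical helper, and speech recognition itself is out of scope.

```python
from typing import List, Optional


def _phrases(block: str) -> List[str]:
    """Split phrases entered one per line, ignoring blank lines."""
    return [line.strip().lower() for line in block.splitlines() if line.strip()]


def voice_command_matches(transcript: str, include: Optional[str] = None,
                          exclude: Optional[str] = None) -> bool:
    """Check a recognized transcript against Include/Exclude phrase lists.

    Matching is case-insensitive substring search, which is an assumption.
    """
    text = transcript.lower()
    if include is not None and not any(p in text for p in _phrases(include)):
        return False  # none of the required phrases was heard
    if exclude is not None and any(p in text for p in _phrases(exclude)):
        return False  # an excluded phrase was heard
    return True


print(voice_command_matches("Turn on the lights", include="lights\nlamp"))  # True
print(voice_command_matches("Play some music", include="lights\nlamp"))     # False
print(voice_command_matches("Turn off the lights", exclude="off"))          # False
```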